Evil Operation: Breaking Speaker Recognition with PaddingBack

Why we named it PaddingBack: Padding Backdoor Attack

Abstract: Machine Learning as a Service (MLaaS) has gained popularity due to advancements in machine learning. However, untrusted third-party platforms have raised concerns about AI security, particularly regarding backdoor attacks. Recent research has shown that, as with image backdoors, speech backdoors can use transformations as triggers. However, human ears easily detect these transformations, which arouses suspicion. In this paper, we introduce PaddingBack, an inaudible backdoor attack that uses malicious operations to make poisoned samples indistinguishable from clean ones. Instead of using external perturbations as triggers, we exploit padding, a widely used speech signal operation, to break speaker recognition systems. Our experimental results demonstrate the effectiveness of the proposed approach, which achieves a high attack success rate while maintaining high benign accuracy. Moreover, PaddingBack is able to resist defense methods.
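The abstract reports results as attack success rate (ASR) and benign accuracy (BA). As a minimal sketch of how these two metrics are commonly computed (this page does not spell out the exact definitions used in the experiments), BA is the accuracy on clean test samples and ASR is the fraction of triggered test samples that the model assigns to the adversary's target label. The function names are hypothetical, and excluding test samples whose true label already equals the target from the ASR is an assumed convention.

```python
import numpy as np

def benign_accuracy(pred_clean, y_true) -> float:
    """Fraction of clean (untriggered) test samples classified as their ground-truth label."""
    return float(np.mean(np.asarray(pred_clean) == np.asarray(y_true)))

def attack_success_rate(pred_triggered, y_true, target_label) -> float:
    """Fraction of triggered test samples classified as the adversary's target label.

    Assumption: samples whose true label already equals target_label are excluded,
    since predicting the target for them says nothing about the backdoor.
    """
    pred = np.asarray(pred_triggered)
    y = np.asarray(y_true)
    mask = y != target_label
    return float(np.mean(pred[mask] == target_label))

if __name__ == "__main__":
    y_true = [0, 1, 2, 3]
    print(benign_accuracy([0, 1, 2, 0], y_true))
    print(attack_success_rate([3, 3, 1, 3], y_true, target_label=3))
```

A backdoor attack aims to push ASR toward 1 while keeping BA close to that of a model trained on clean data.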

Index terms: MLaaS, SRSs, backdoor attacks, PaddingBack

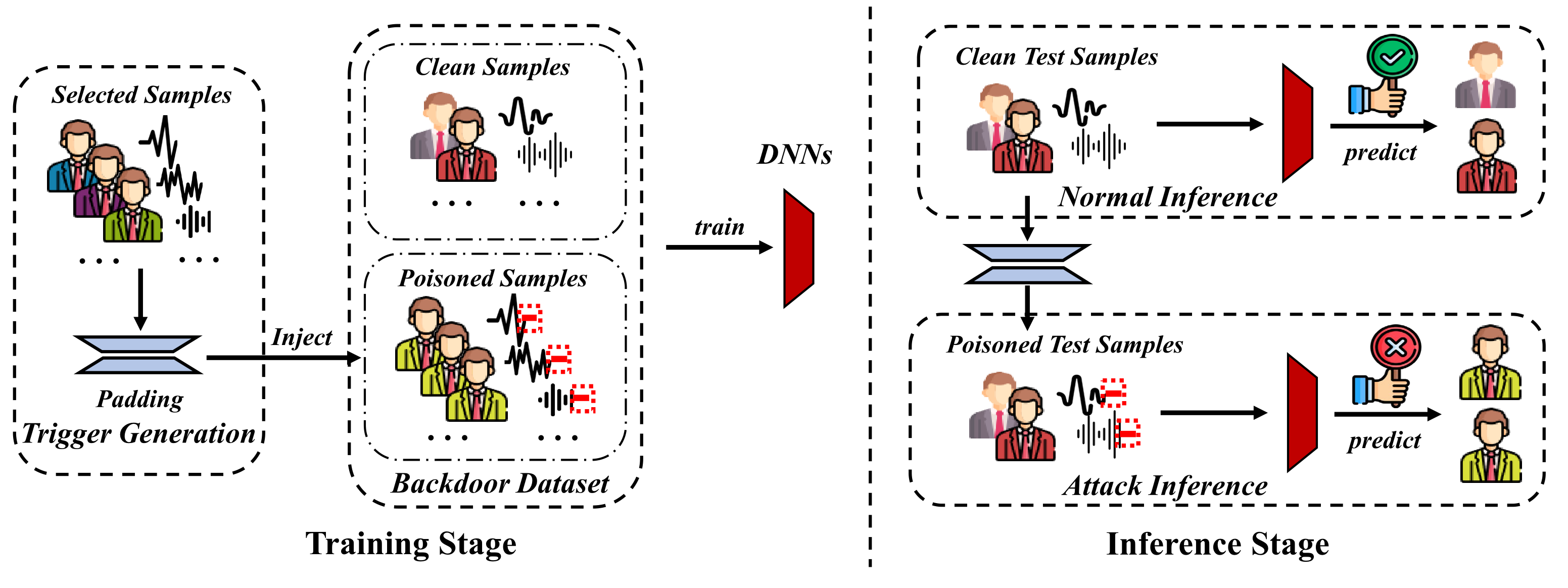

Framework

Shirts of different colors indicate different speakers, and the yellow shirt denotes the label specified by the adversary. During the training phase, the adversary randomly selects $\rho\%$ of the training samples, turns them into poisoned samples by adding the trigger, and changes their labels to the adversary-specified label. The poisoned samples and the remaining clean samples are then combined into a backdoor dataset, which the victim uses to train the model. During the inference phase, the adversary can activate the backdoor by padding the input by a specific length, which manipulates the model's prediction toward the adversary-specified label. Meanwhile, clean samples are still correctly classified as their ground-truth labels.
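The poisoning and triggering steps of the framework can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: the trigger is assumed to be `pad_len` zero-valued samples appended to the end of a 1-D waveform, and the names `pad_trigger`, `poison_dataset`, and `pad_len` are hypothetical. How the PaddingBack-C and PaddingBack-W variants choose their padding is not specified on this page.

```python
import numpy as np

def pad_trigger(wave: np.ndarray, pad_len: int) -> np.ndarray:
    """Hypothetical trigger: append pad_len zero-valued samples to a 1-D waveform."""
    return np.pad(wave, (0, pad_len), mode="constant", constant_values=0)

def poison_dataset(waves, labels, rho, target_label, pad_len, seed=0):
    """Poison rho% of (waveform, label) pairs: pad them and relabel them to target_label."""
    rng = np.random.default_rng(seed)
    n = len(waves)
    n_poison = int(n * rho / 100)  # rho is a percentage, as in the framework description
    poison_idx = set(rng.choice(n, size=n_poison, replace=False).tolist())
    new_waves, new_labels = [], []
    for i, (wave, label) in enumerate(zip(waves, labels)):
        if i in poison_idx:
            new_waves.append(pad_trigger(wave, pad_len))  # add the padding trigger
            new_labels.append(target_label)               # relabel to the adversary's target
        else:
            new_waves.append(wave)                        # remaining samples stay clean
            new_labels.append(label)
    return new_waves, new_labels, sorted(poison_idx)

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    waves = [rng.standard_normal(16000) for _ in range(10)]  # toy stand-ins for utterances
    labels = list(range(10))                                  # one toy speaker per utterance
    bd_waves, bd_labels, idx = poison_dataset(waves, labels, rho=20, target_label=7, pad_len=4000)
    print(idx, [bd_labels[i] for i in idx])
    # Inference: the adversary applies the same padding to an input to activate the backdoor.
    print(pad_trigger(waves[0], 4000).shape)
```

Because the trigger is an ordinary signal operation rather than an added perturbation, the original samples of a poisoned utterance are left untouched; only its length changes.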

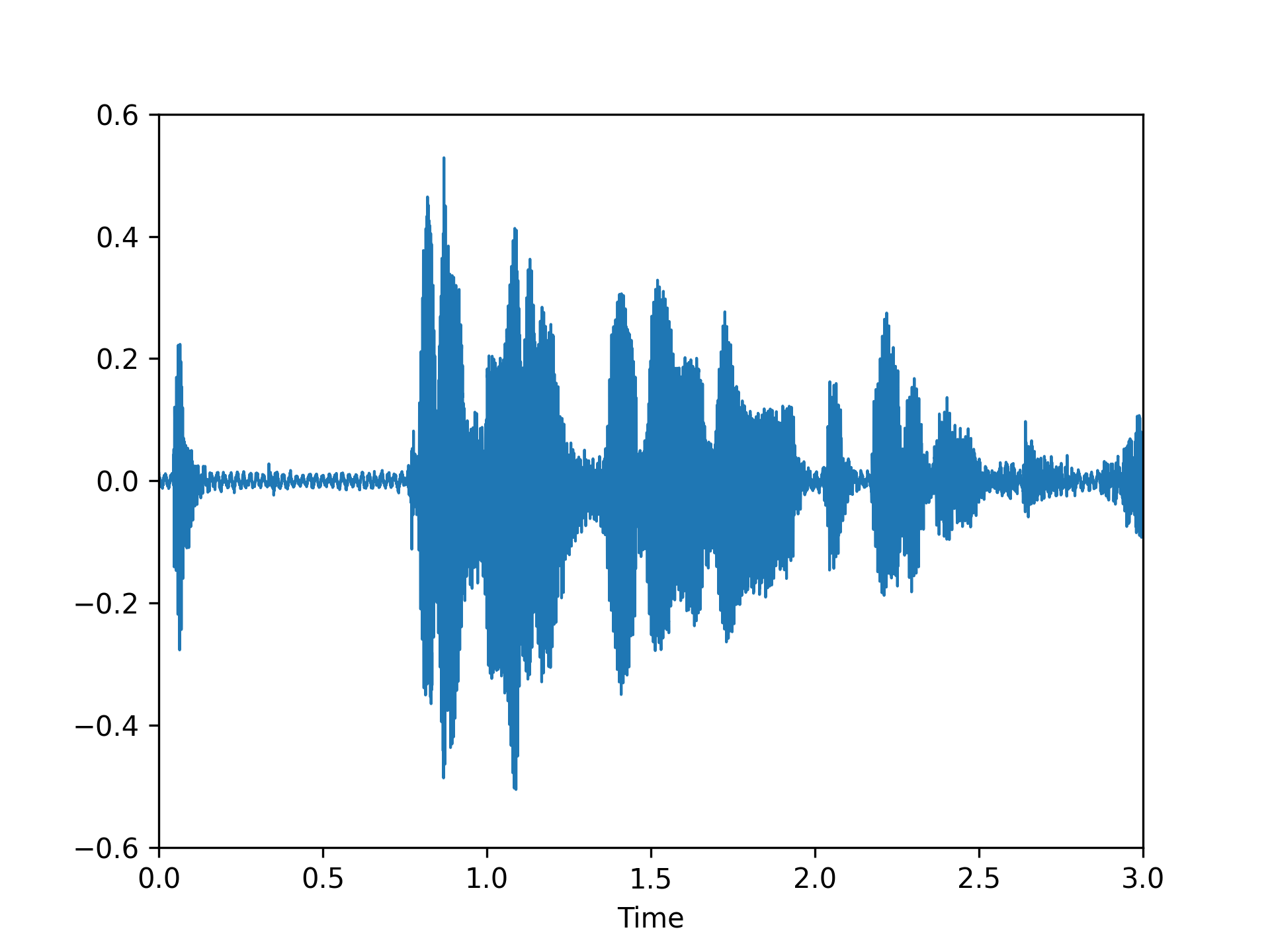

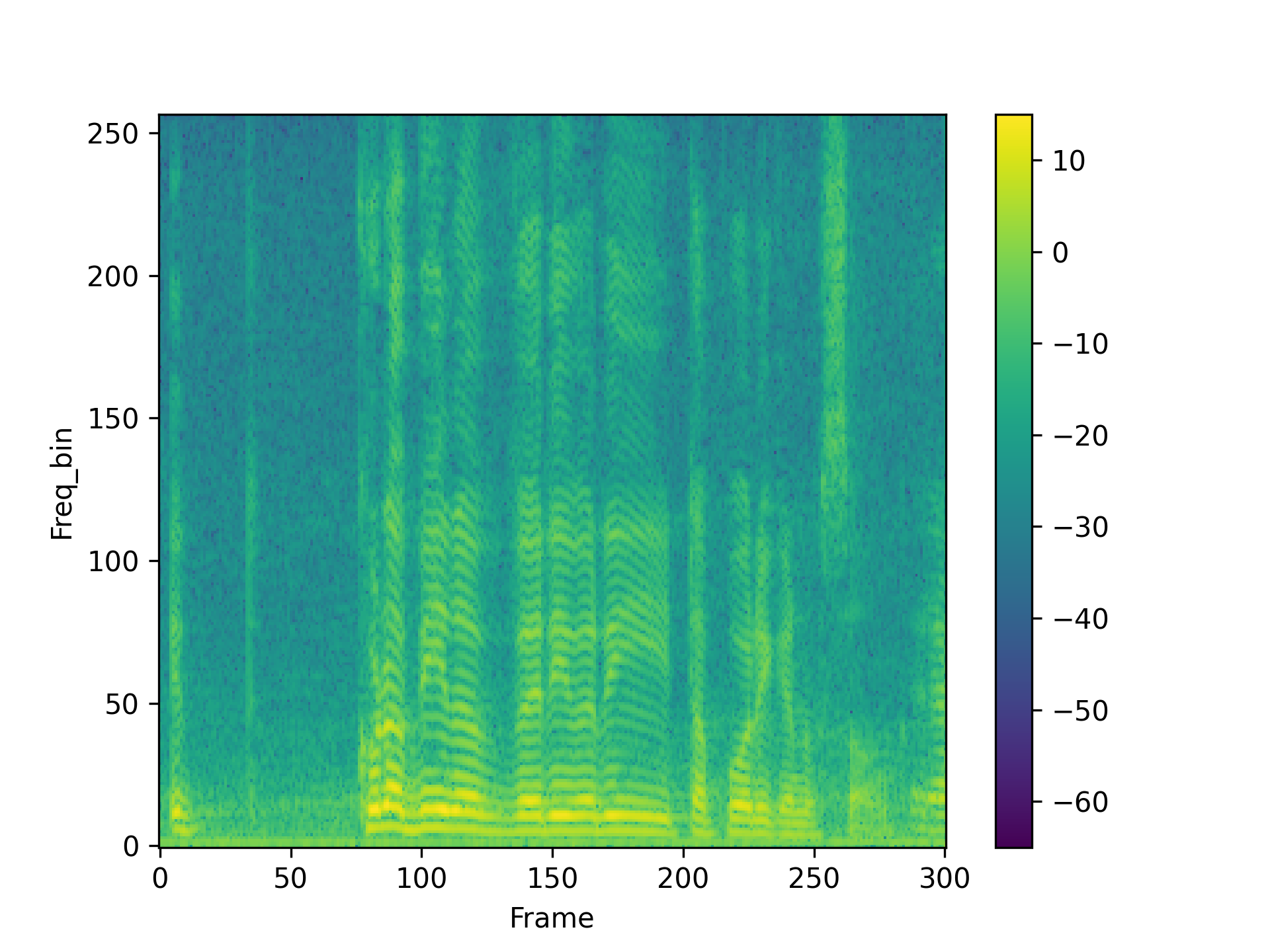

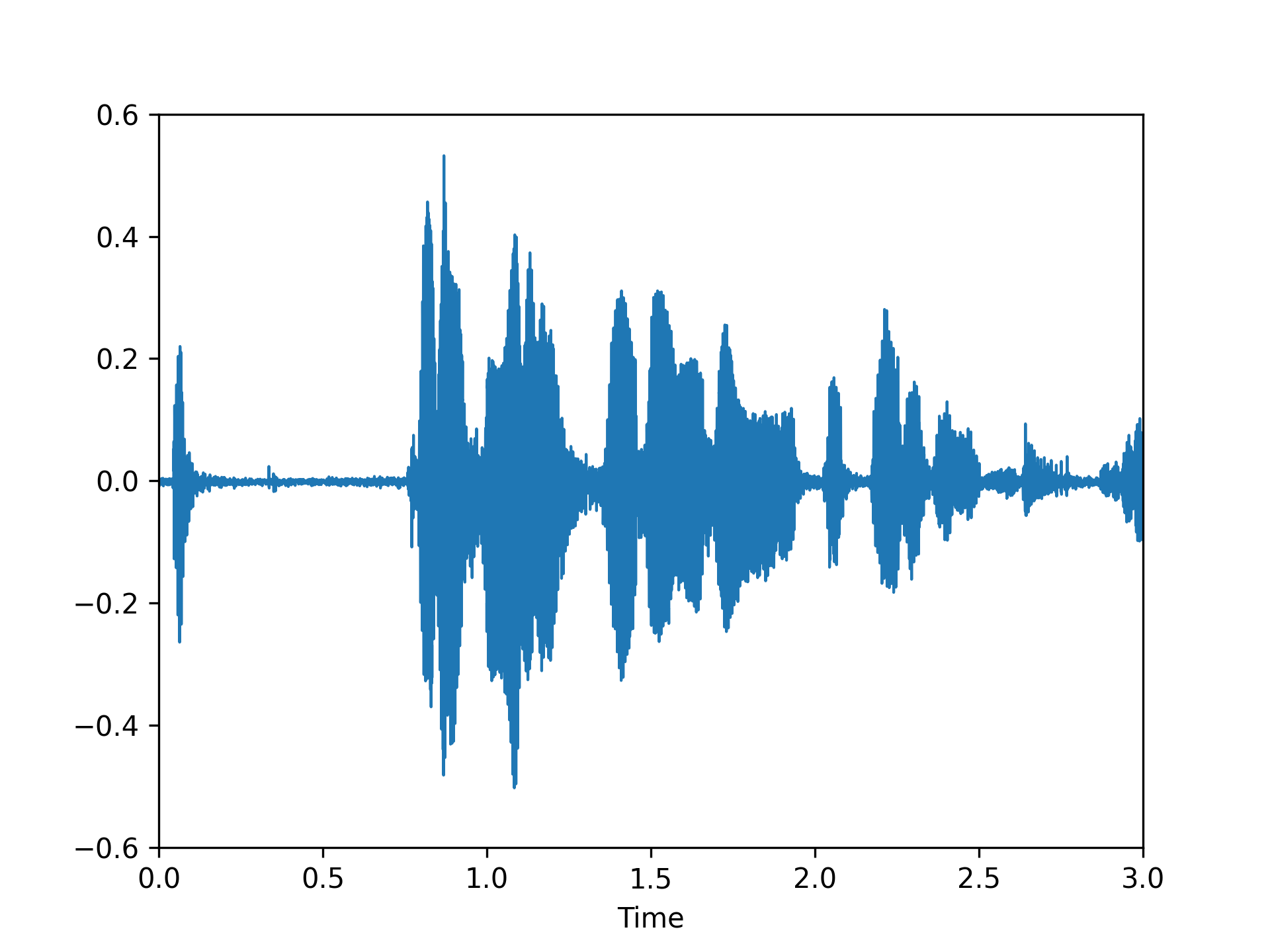

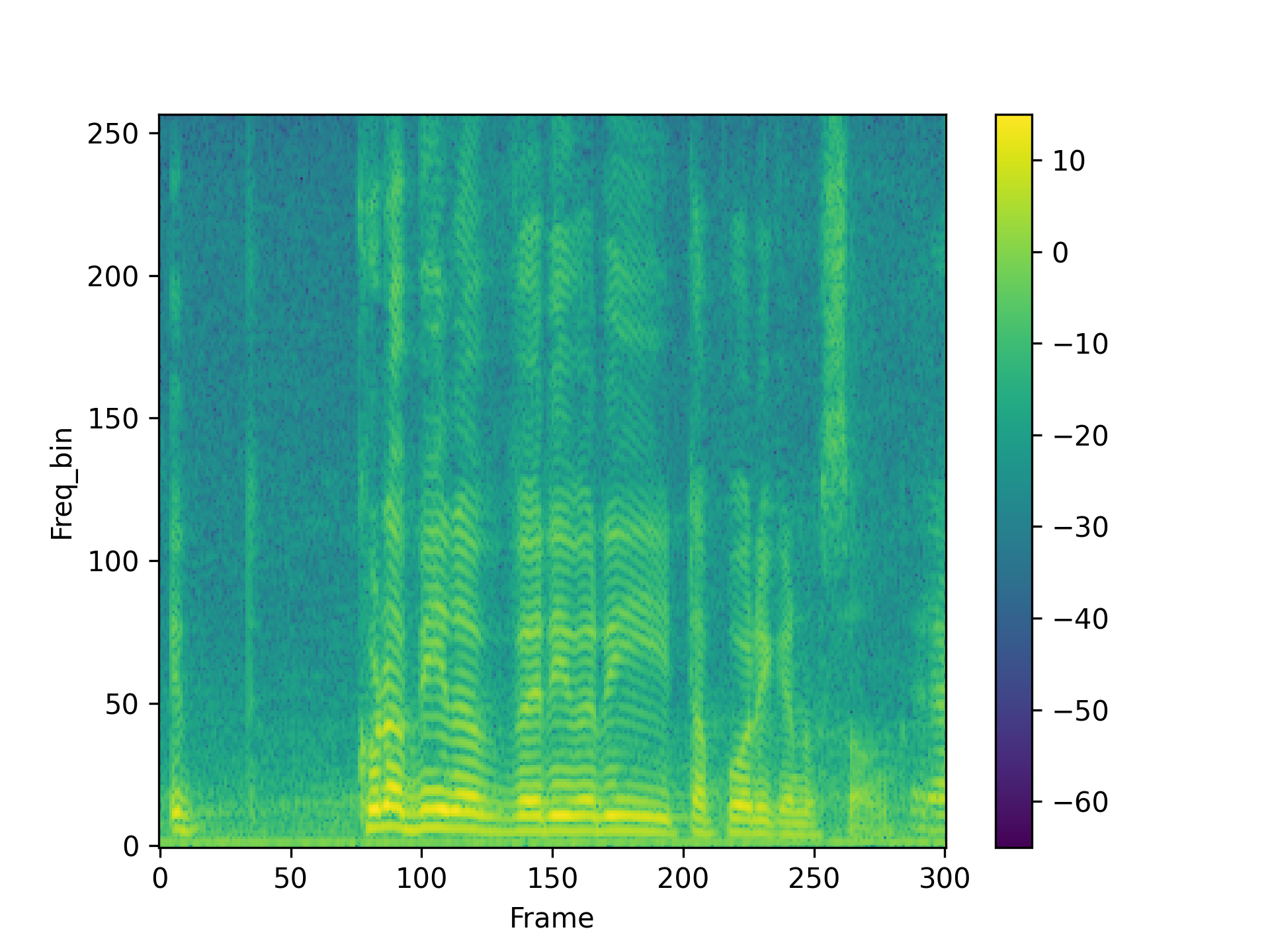

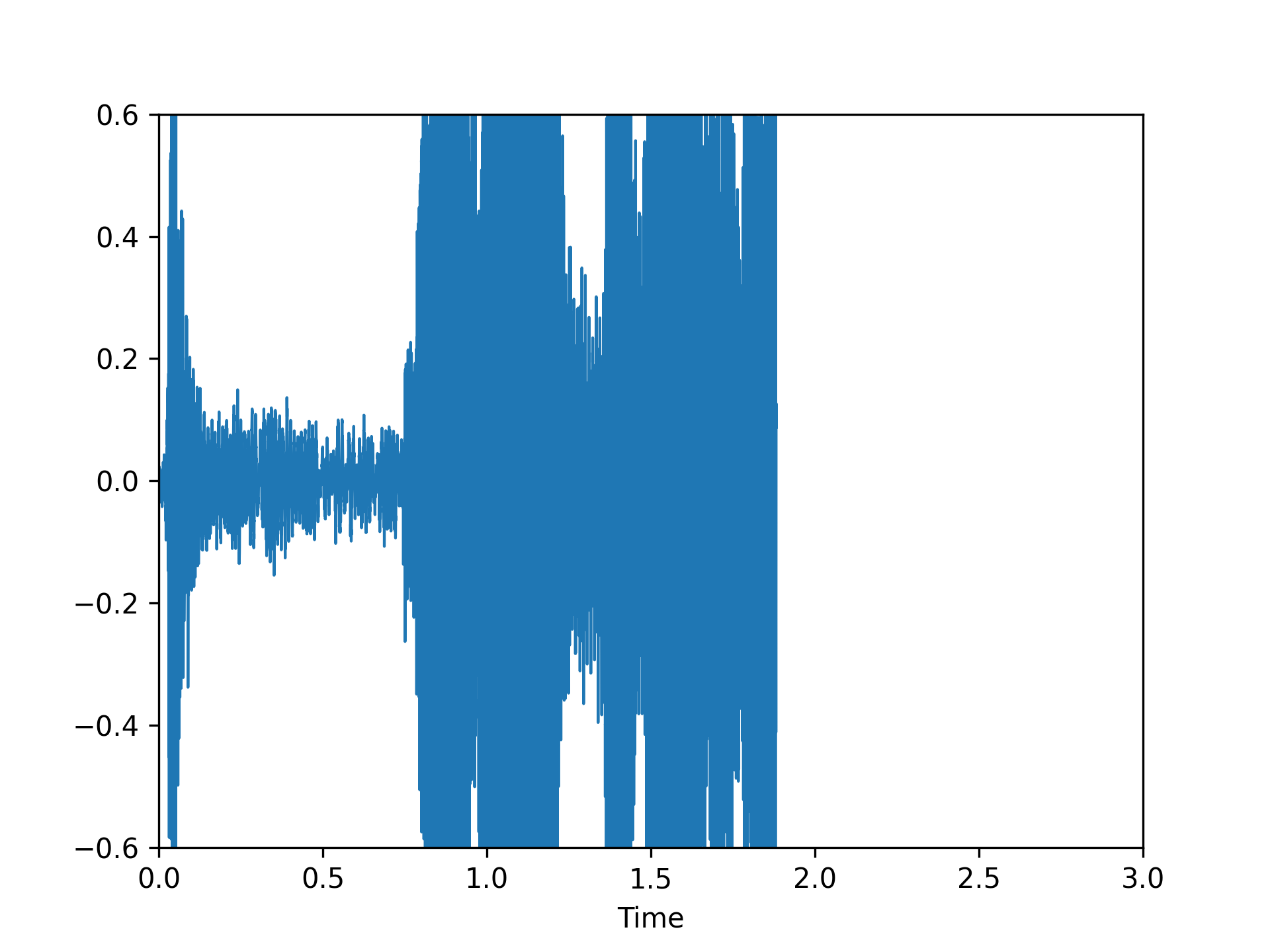

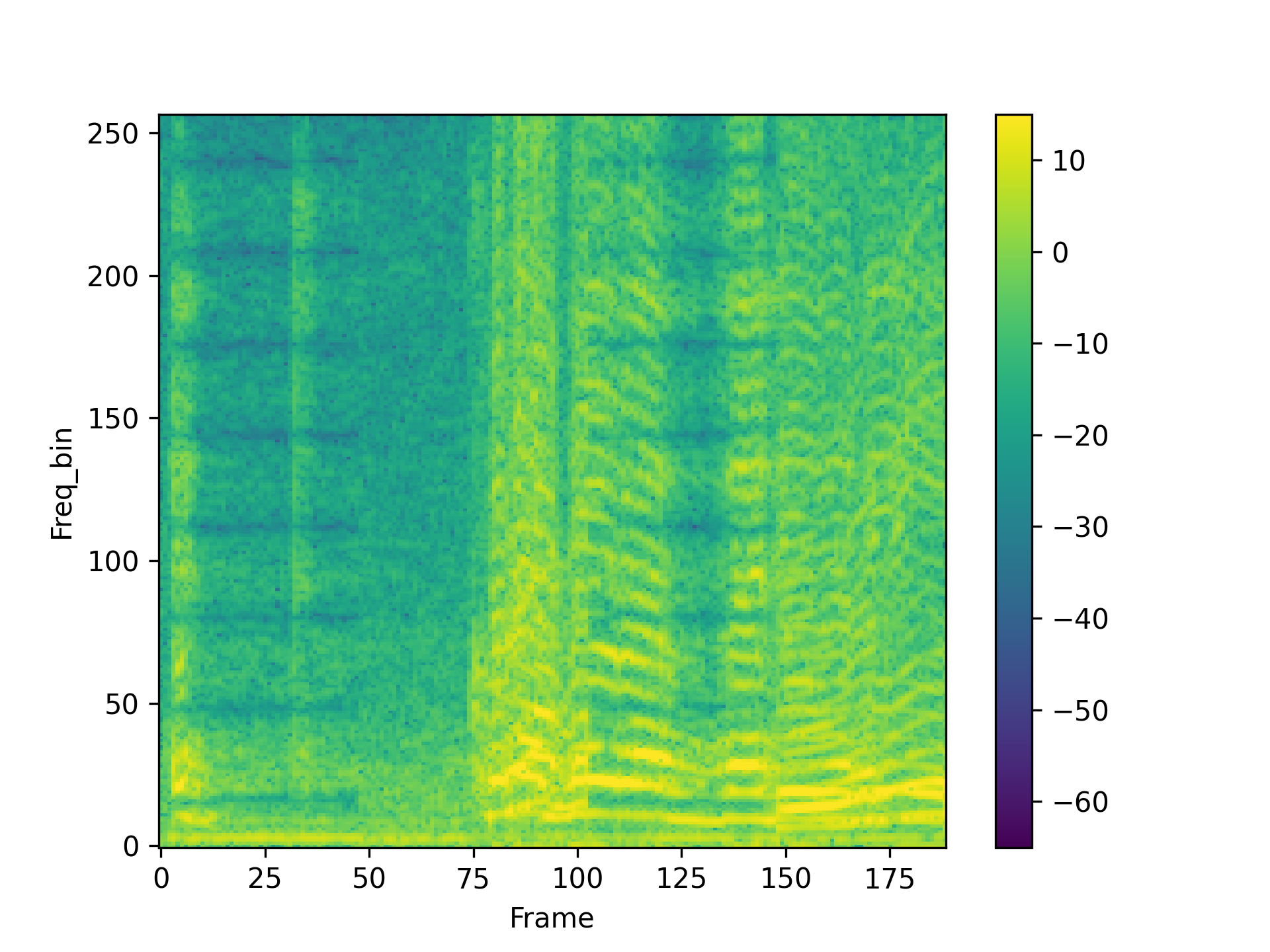

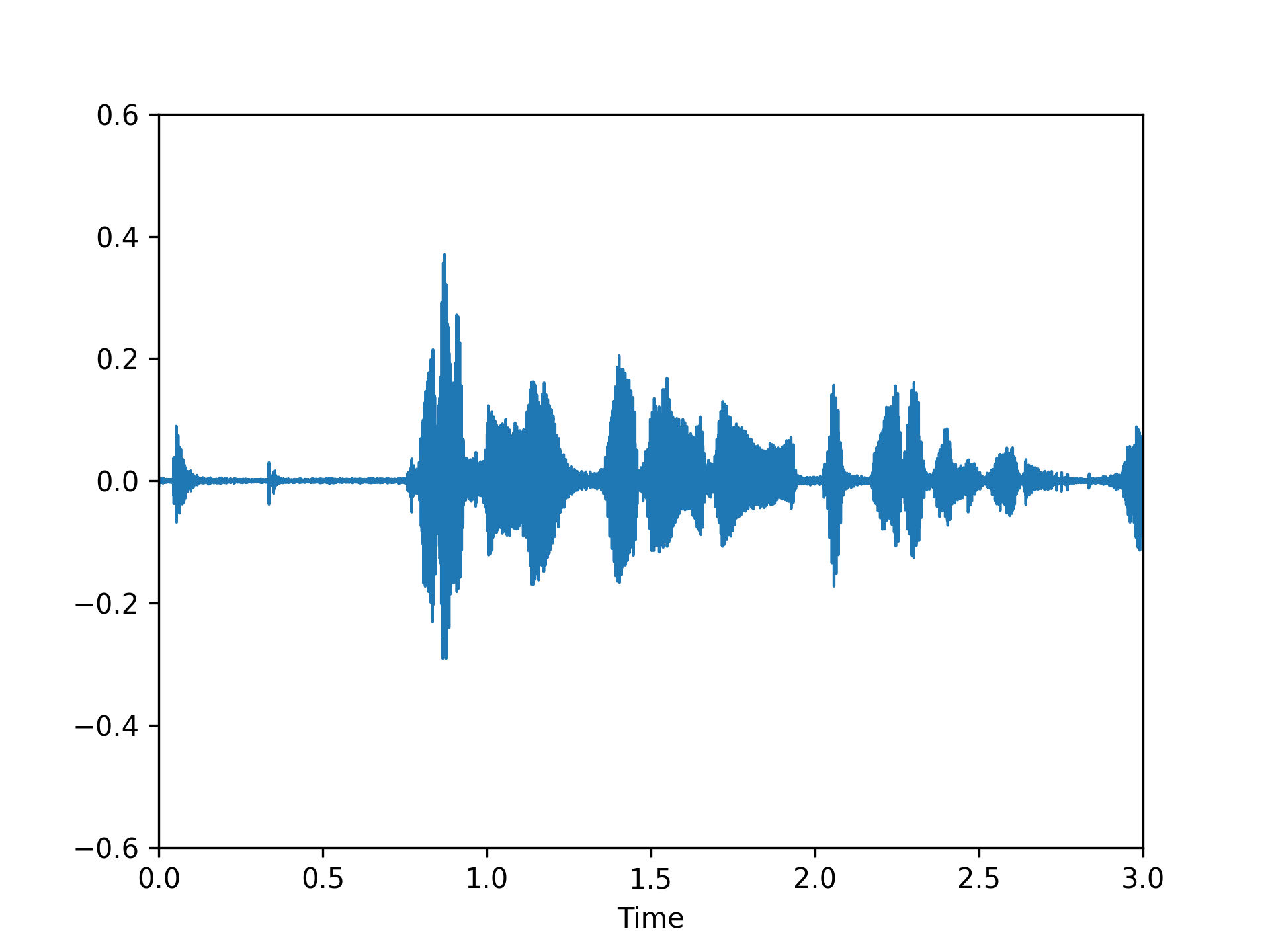

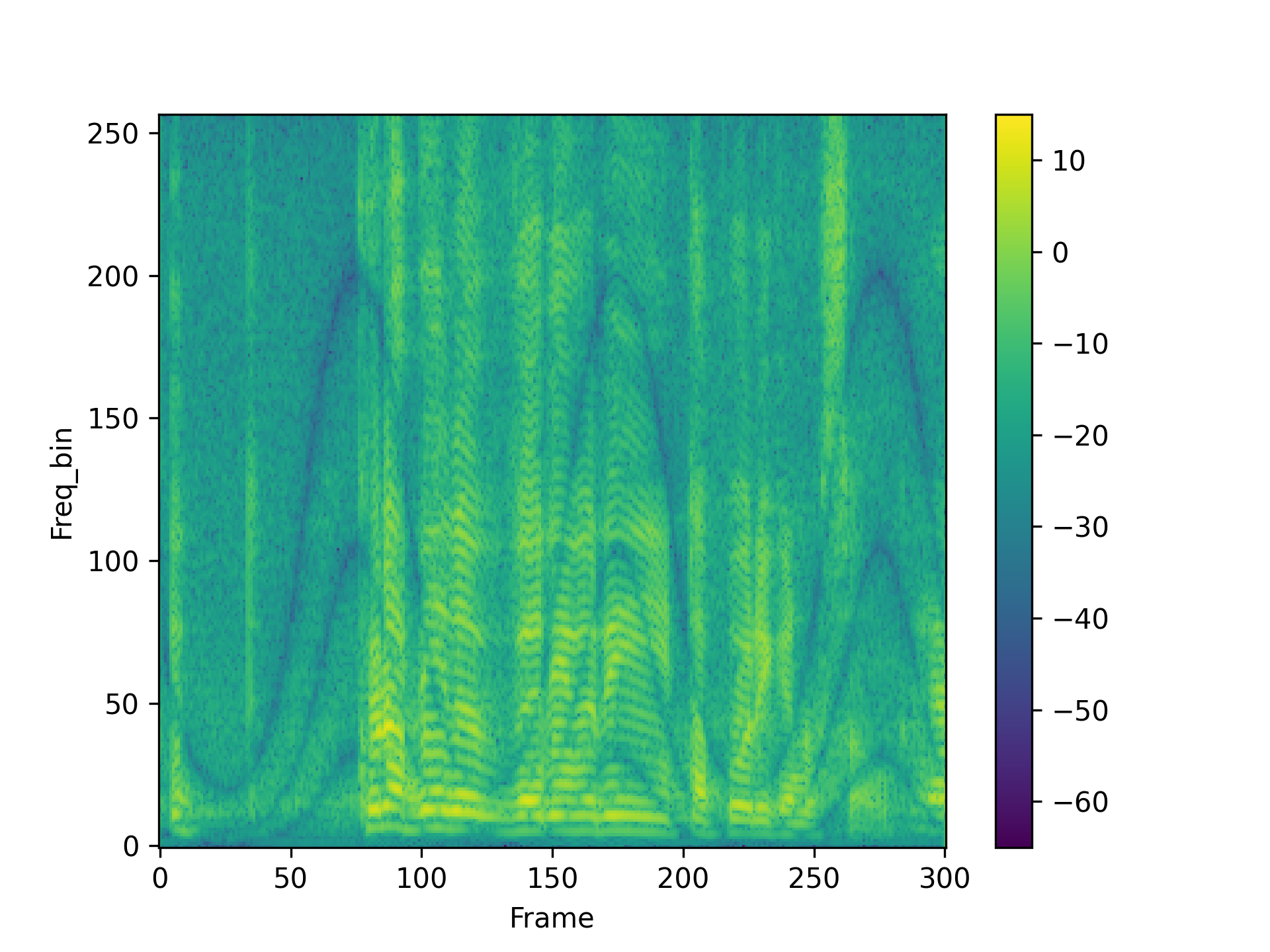

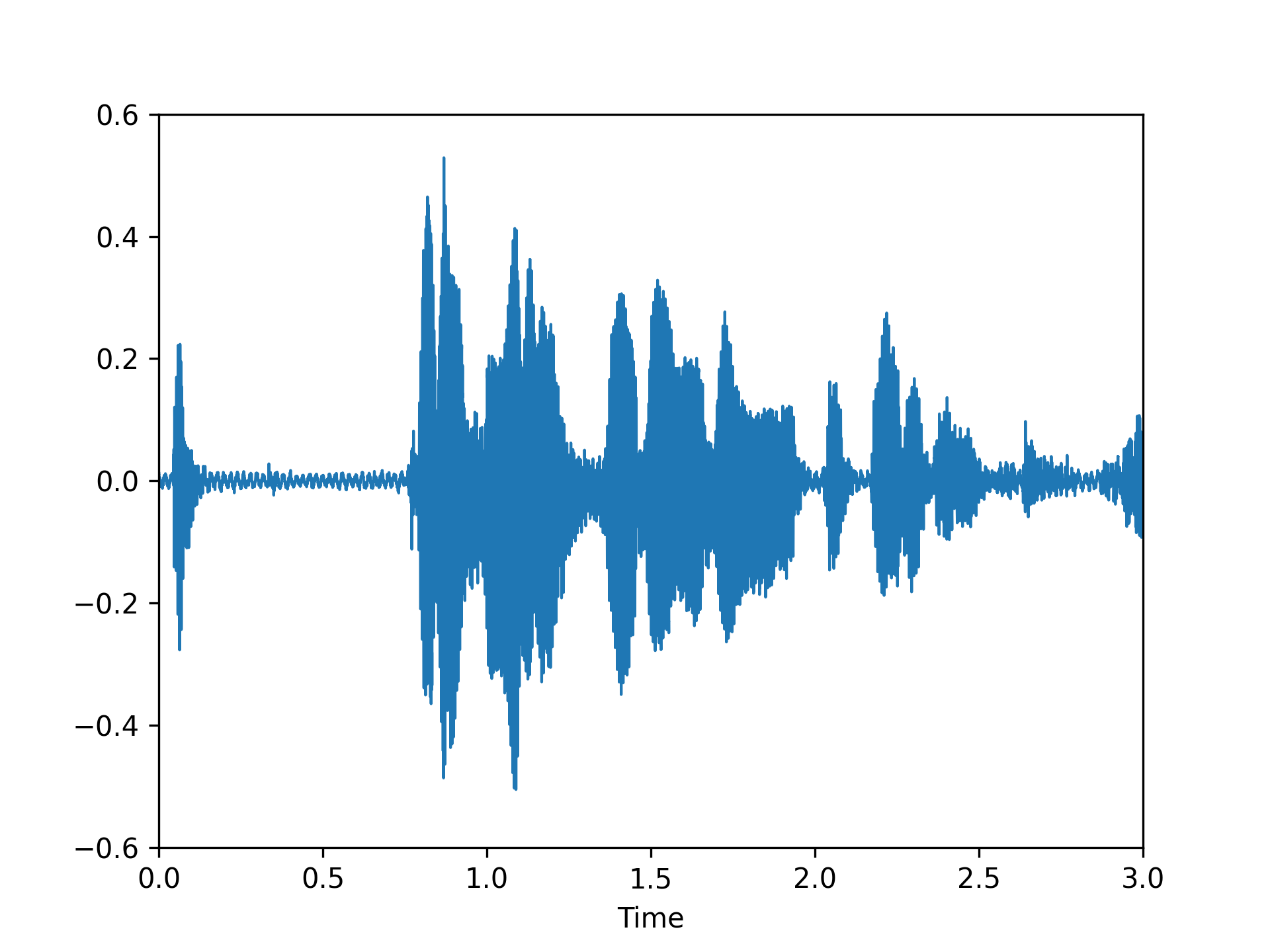

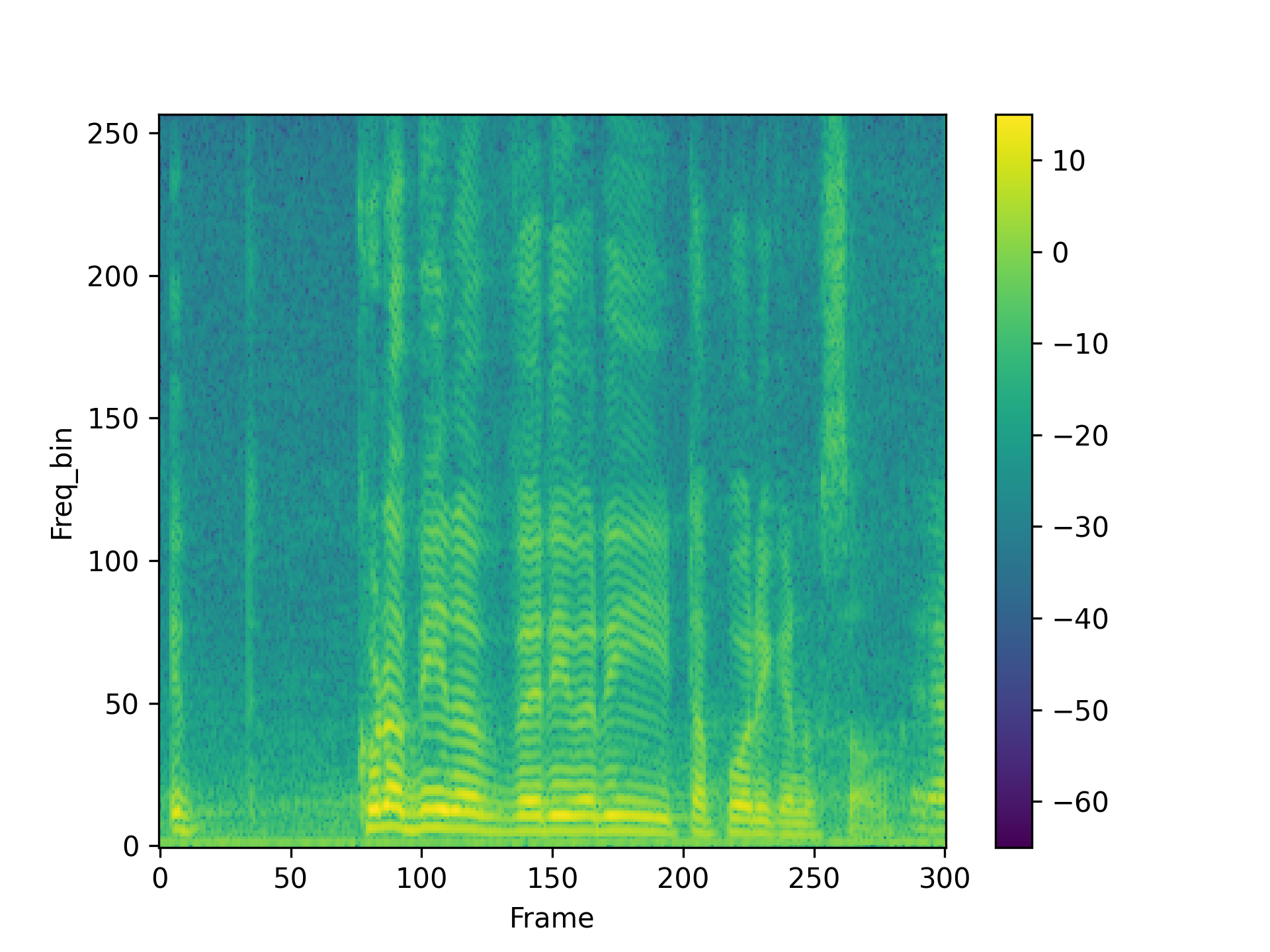

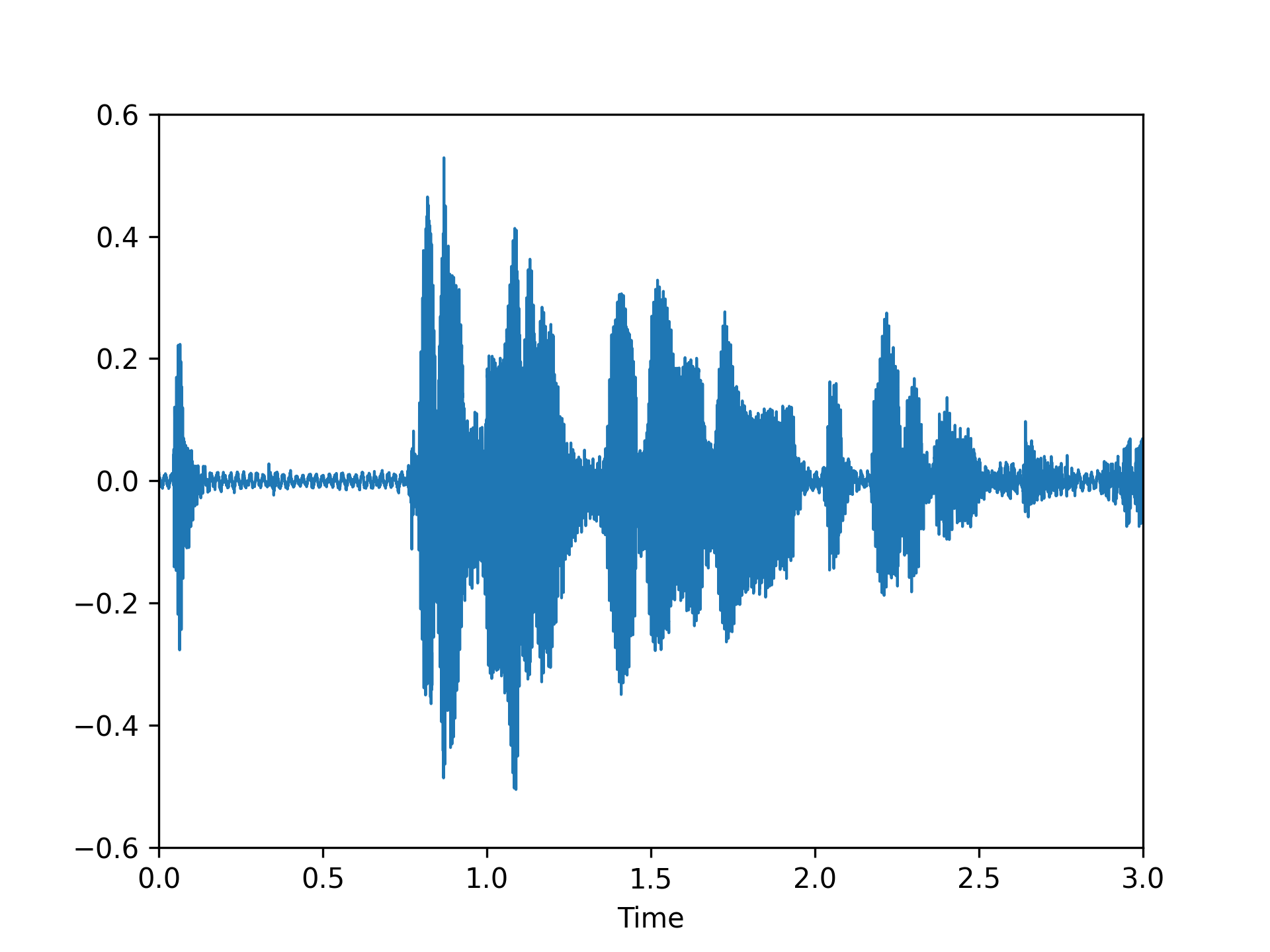

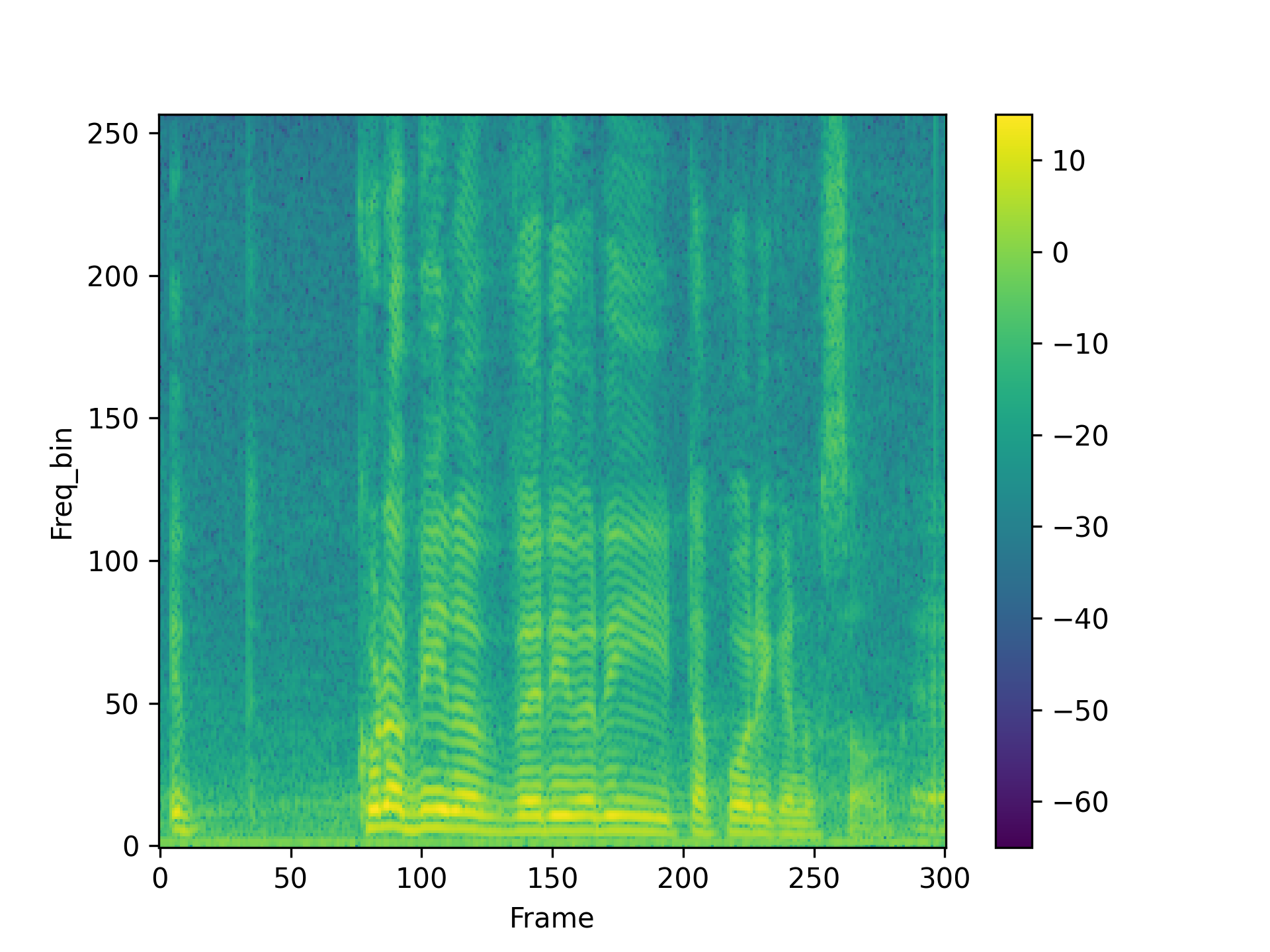

Stealthiness Experiment: Poisoned Speech Visualization

We visualized a comparison between our method and existing speech backdoor attacks:

[Figure panels: Clean, PhaseBack, Style 3, Style 5, PaddingBack-C, PaddingBack-W]

From the visualization results, we observe that:

- Previous operation-based speech backdoor attacks lack sufficient stealthiness and introduce noticeable distortion into the poisoned samples.

- Our proposed method renders the poisoned speech nearly indistinguishable from the original speech, demonstrating a high degree of stealthiness.
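One way to see why padding leaves so little visible trace is to compare the magnitude spectrograms of a clean waveform and its padded version. The sketch below assumes zero padding appended at the end, a random signal standing in for an utterance, and a simple Hann-windowed STFT written directly with NumPy; the frame size, hop, signal length, and pad length are hypothetical values, not the settings behind the visualizations on this page. Every frame that covers only original audio is identical in both spectrograms, and every frame lying entirely in the padded region is silent.

```python
import numpy as np

def stft_mag(wave: np.ndarray, n_fft: int = 256, hop: int = 128) -> np.ndarray:
    """Magnitude STFT with a Hann window; no centering, incomplete trailing frames are dropped."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(wave) - n_fft) // hop
    frames = np.stack([wave[k * hop : k * hop + n_fft] * window for k in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # shape: (n_frames, n_fft // 2 + 1)

rng = np.random.default_rng(0)
clean = rng.standard_normal(16000)   # stand-in for 1 s of audio at 16 kHz (hypothetical)
poisoned = np.pad(clean, (0, 4000))  # hypothetical trigger: 4000 zero samples appended

S_clean = stft_mag(clean)
S_poisoned = stft_mag(poisoned)
n = S_clean.shape[0]

# Frames computed only from original samples are unchanged by padding.
print(np.allclose(S_clean, S_poisoned[:n]))
# Frames starting at or after sample 16000 contain only padding and are silent.
first_pad_frame = -(-16000 // 128)  # ceil(16000 / 128)
print(np.all(S_poisoned[first_pad_frame:] == 0))
```

Only the single frame straddling the boundary mixes speech and padding, which is consistent with the poisoned spectrogram looking like the clean one followed by silence.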

Stealthiness Experiment: Subjective Auditory Test

| Speech | `id10002\C7k7C-PDvAA\00002.wav` | `id11243\6-oQlSgLiFw\00004.wav` | `id10059\8UMexHeqYH0\00001.wav` | `54-121080-0001` | `439-123866-0009.wav` |
|---|---|---|---|---|---|
| Original sample | | | | | |
| PhaseBack | | | | | |
| Style 3 | | | | | |
| Style 5 | | | | | |
| PaddingBack-C | | | | | |
| PaddingBack-W | | | | | |

From the subjective auditory test, we observe that:

- Previous operation-based speech backdoor attacks noticeably degrade the poisoned audio, which makes them susceptible to detection by human listeners and risks exposing the attack.

- Our proposed operation-based speech backdoor attack renders the poisoned audio nearly indistinguishable from the original audio. Like PhaseBack, it can be considered an inaudible backdoor attack.